It’s about time we added a build tool to the project. It’s possible to create jars by hand, but that soon becomes time-consuming and error-prone. Having a repeatable build process launched with a single command is pretty much essential to doing anything interesting with software.

Over the years I’ve used make, ant, maven and gradle. The one of these I like least is ant. It seems to produce massive, thousand-line monstrosities that are unreadable and inscrutable. And while ivy is fairly similar to maven’s dependency management, it doesn’t seem as natural to me. Having said that, maven can also get unwieldy, with simple builds that get out of hand.

I’ve not used Gradle a great deal, but it seems an obvious choice. A significant reason is its success: Gradle is the standard tool for Android Studio and Spring. Popularity is often under-rated as a reason for choosing tools or frameworks, but it means examples and expertise are easier to find. There may be many good reasons for choosing lesser-used frameworks, but knowing there is a vibrant community around a platform is a major plus.

However, I’m still cautious about Gradle. I’ve found some of the plugins I’ve used unhelpful, with the missing options harder to find than they were with maven. I also find the documentation focuses too much on how to do certain tasks rather than explaining the underlying concepts and assumptions. On top of that is a growing suspicion that Groovy may result in scripts that are write-only, impossible to read back later on, just like Perl scripts used to be.

(There’s an example in the documentation of the power of dynamically-generated tasks and their potential for chaos. The script

    4.times { counter ->
        task "task$counter" << {
            println "I'm task number $counter"
        }
    }

creates four tasks (task0 to task3), which can then be called as

    > gradle -q task1
    I'm task number 1

I can see some powerful uses for this, but I can also see myself struggling to work out where on earth a failing task comes from.)
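One way to soften that problem might be to give each generated task a description saying where it was created. The following is only a sketch of the idea, not something taken from the Gradle documentation: the wording of the description is my own, and the task body uses doLast rather than the << shorthand.

```groovy
// build.gradle
// Sketch: the same four generated tasks (task0 to task3), each carrying
// a description that records where it was generated.
4.times { counter ->
    task("task$counter") {
        // Illustrative text only; anything that points back to this loop would do.
        description = "Generated by the 4.times loop in build.gradle (index $counter)"
        doLast {
            println "I'm task number $counter"
        }
    }
}
```

Running gradle tasks --all should then list each generated task alongside its description, which at least gives a starting point when one of them fails.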

Despite some teething problems with the Artifactory plugin at work, I’ve enjoyed using Gradle so far. I love groovy for its concision and charm and there’s an optimism to using a new tool, particularly when the documentation explains how much better it is. It may turn out that maven would be a better choice but, because we’re working on infrastructure rather than code, we should have a lot more freedom to change things later.

Gradle uses the same concept of convention over configuration as maven. Past experience tells me that it’s easier to work with the grain of such things than to fight the tool, so we will move our source directories from src/ to src/main/java/ in line with this.

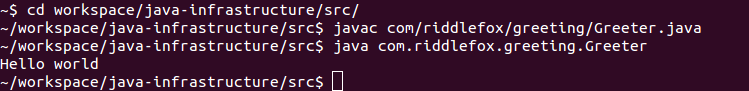

Because we’ve used the standard directory layout, the initial build script is extremely simple. In fact, it’s just a single line in our initial build.gradle file:

    apply plugin: 'java'

Running the command ‘gradle build’ results in the jar file being built. Nice and straightforward – but I feel a slight sense of nervousness that so much happens with a single command. For example, if we had not moved the source directories, gradle would still happily produce a jar file, just one with nothing in it.
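For comparison, the java plugin can be told where the sources live instead of relying on the convention. This is a minimal sketch, assuming the code had stayed directly under src/; it is not what this project does, since we moved the directories instead.

```groovy
// build.gradle
// Sketch only: point the main source set at the old src/ directory
// rather than the conventional src/main/java/.
apply plugin: 'java'

sourceSets {
    main {
        java {
            // Assumption: all production sources sit directly under src/
            srcDirs = ['src']
        }
    }
}
```

Every override like this is one more thing a newcomer has to read before the build makes sense, which is part of why following the standard layout seemed the better choice.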

Introducing a new tool means something else to track. As well as noting the current version in the readme and todo files, we can use a mechanism Gradle offers for reducing the risk of different versions being used: the Gradle wrapper. This is a script that checks whether the required version of Gradle is available on the local machine. If not, that version is downloaded and stored locally. To set it up, we add a new wrapper task to the build script, then execute the gradle wrapper command.

    task wrapper(type: Wrapper) {
        gradleVersion = '2.11'
    }

The wrapper adds several new files: the gradlew and gradlew.bat scripts, as well as a jar file and configuration in the gradle/wrapper folder. These are intended to be committed to git, so that anyone building the project in future can use the correct version of gradle via the gradlew command. That version is downloaded once and stored centrally (in the user’s Gradle home directory) so that it can be shared by other gradlew scripts as needed.

However, this convenience introduces a new issue, one we will face again when we introduce dependency management: how do we make sure that the code we download is safe? There’s an interesting discussion of the risk in a post called “How to Take over the computer of any Java developer”. Basically, we need to make sure that the code we download has not been tampered with.

A basic level of security is provided by the distributionSha256Sum property, which can be added to gradle-wrapper.properties and checks that the zip file downloaded from http://services.gradle.org/distributions/gradle-2.11-bin.zip is the one expected. Of course, this in itself requires finding “the SHA-256 hash of a known Gradle distribution”. We’d probably be OK in trusting the (HTTP) download, but this isn’t really good enough. It’s going to be added to the TODO list, and dealt with after we’ve looked at dependency management.
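When we do come back to this, one small piece of the puzzle is computing the hash of a zip we already have. The task below is a rough sketch of that step only: the task name printSha256 and the distZip project property are names I have made up for illustration, and a hash computed from a download we don't yet trust proves nothing until it is compared with a value obtained from a source we do trust.

```groovy
// build.gradle
// Hypothetical helper task (not part of Gradle): prints the SHA-256 of a
// local file whose path is passed as -PdistZip=...
task printSha256 {
    doLast {
        // 'distZip' is an assumed property name; the task fails if it is not supplied
        def zip = file(project.property('distZip'))
        def digest = java.security.MessageDigest.getInstance('SHA-256')

        // Read the file in chunks so the whole zip is never held in memory
        zip.eachByte(8192) { byte[] buffer, int bytesRead ->
            digest.update(buffer, 0, bytesRead)
        }

        // Render the digest bytes as lowercase hex
        println digest.digest().collect { String.format('%02x', it) }.join('')
    }
}
```

It could be run as gradle -q printSha256 -PdistZip=gradle-2.11-bin.zip, with the printed value then checked by hand before being copied into distributionSha256Sum.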

The latest git commit is cd8e97a. In the next part we’ll look at adding a continuous integration server.